Project Summary

Recent hardware innovations have produced low-power embedded devices (also known as motes) that can be equipped with small cameras and can communicate with neighbouring units over wireless interfaces. Examples of these devices include the CITRIC motes and smartphones (such as the Apple iPhone). These devices can organize themselves into a "Smart Camera Network", an attractive platform for applications at the intersection of sensor networks and computer vision. Flexibility is a key aspect of this technology: networks of motes or smartphones can be formed easily and expanded on the fly by adding new nodes.

The main challenge in this setting is that each node alone has limited power and computational capabilities. Standard computer vision algorithms (e.g., Structure from Motion, target tracking, object recognition) are centralized and are not suitable for direct deployment, because they would quickly exhaust the resources of a single node. To perform more complex and demanding tasks, the nodes therefore need to collaborate by means of distributed algorithms. However, standard algorithms from the Sensor Network community (e.g., average consensus) cannot be employed directly, due to the special structures arising in computer vision applications.

In our research we aim to extend algorithms from the Sensor Network and Control communities to obtain distributed solutions for Computer Vision applications. We focus on the following issues:

- Distributed averaging on manifolds: we have developed algorithms that, given individual estimates of a non-Euclidean quantity (e.g., the pose of an object) at each node, consistently compute their average in a distributed fashion. Our solution differs from previous work by explicitly taking into account the intrinsic structure of the manifolds. We have applied our algorithms to the problems of distributed object averaging and face recognition.

- Robust consensus in sensor networks: we have studied the problem of distributed robust optimization where the data at some nodes of the network are corrupted by outliers. Standard average consensus algorithms compute the global mean of the data, i.e., the point that minimizes the sum of squared (L2) distances to the measurements. We generalize this setting by considering other convex cost functions whose minimizers are known to be robust to outliers, e.g., the L1 norm, the fair loss and the Huber loss.

- Consensus averaging in presence of network packet losses: standard consensus algorithms assume that, at each iteration, the nodes perform symmetric updates, i.e., if node i uses the data from node j, then node j also uses the data from node i. In practice this assumption does not always hold, because packets are dropped during network transmissions. As a result, in real-world networks standard consensus algorithms can converge to a value that differs from the correct average of the measurements. We proposed a scheme that adds corrective iterations which guarantee convergence to the correct average in spite of packet losses.

- Camera network localization and calibration: when the fields of view of two cameras intersect, the cameras can estimate their relative pose using two-view epipolar geometry. We proposed algorithms that, given noisy pose estimates between pairs of cameras, consistently localize each camera in the network. Moreover, we proposed algorithms that also calibrate the internal parameters of the cameras by exploiting the presence of a small number of already calibrated cameras in the network.

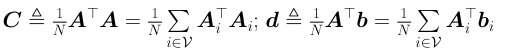

- Applications of classical consensus algorithms to build linear distributed algorithms for computer vision and machine learning: we show how distributed averaging algorithms can be used to solve distributed linear algebraic problems, such as solving a linear system or computing the Singular Value Decomposition of a matrix. We assume that each node has access to only a subset of the problem input (e.g., a few equations of a linear system or a few rows of a matrix). Using these linear algebraic solutions, one can then easily build algorithms for distributed problems in computer vision and machine learning, such as Principal Component Analysis (PCA), Generalized PCA (GPCA), linear 3-D point triangulation, linear pose estimation and Affine Structure from Motion.
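As an illustration of the last point, here is one standard way to solve a linear least-squares problem when each node holds only a few equations: the nodes run average consensus on their local normal-equation terms A_i^T A_i and A_i^T b_i, and each node then solves the resulting small system locally. This is a minimal sketch under our own assumptions (synchronous updates, no packet losses, a fixed step size `eps`, a ring network); the function names `average_consensus` and `distributed_least_squares` are hypothetical, and the construction is not necessarily the one used in our papers.

```python
import numpy as np


def average_consensus(values, neighbors, eps=0.2, iters=500):
    """Synchronous average consensus: x_i <- x_i + eps * sum_j (x_j - x_i).

    `values` holds one array per node; `neighbors[i]` lists the neighbours of
    node i (the graph is assumed undirected and connected).
    """
    x = [np.array(v, dtype=float) for v in values]
    for _ in range(iters):
        new_x = []
        for i, xi in enumerate(x):
            step = sum((x[j] - xi for j in neighbors[i]), np.zeros_like(xi))
            new_x.append(xi + eps * step)
        x = new_x  # all nodes update simultaneously
    return x


def distributed_least_squares(A_blocks, b_blocks, neighbors, eps=0.2, iters=500):
    """Each node i holds a few equations A_i x = b_i of one global system."""
    # Local normal-equation terms; their network averages equal the global
    # terms A^T A and A^T b divided by the number of nodes.
    M = [A.T @ A for A in A_blocks]
    v = [A.T @ b for A, b in zip(A_blocks, b_blocks)]
    M_avg = average_consensus(M, neighbors, eps, iters)
    v_avg = average_consensus(v, neighbors, eps, iters)
    # Every node solves the (approximately) averaged normal equations locally.
    return [np.linalg.solve(Mi, vi) for Mi, vi in zip(M_avg, v_avg)]


# Example: 4 nodes on a ring, 3 equations each, 3 unknowns.
rng = np.random.default_rng(0)
x_true = np.array([1.0, -2.0, 0.5])
A_blocks = [rng.normal(size=(3, 3)) for _ in range(4)]
b_blocks = [A @ x_true for A in A_blocks]
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
estimates = distributed_least_squares(A_blocks, b_blocks, ring)
```

Because both averaged terms are the global terms divided by the same number of nodes, the scaling cancels and every node recovers the centralized least-squares solution once consensus has converged; with a finite number of iterations the estimates are only approximate.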

The algorithms illustrated in this page and other algorithms for camera sensor networks are also covered in our tutorial paper, which appeared in the IEEE Signal Processing Magazine [8].

Consensus on manifolds

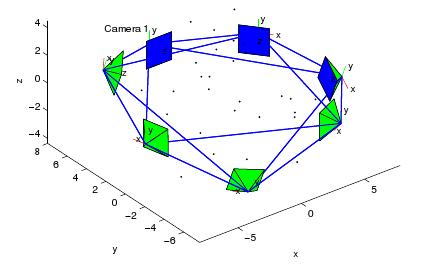

In this line of research our goal is to develop algorithms that average, in a distributed fashion, quantities lying on non-Euclidean manifolds. Of particular interest to us is the case of pose estimation. We assume that the nodes are localized (i.e., each mote knows its position relative to its neighbours) and that each node can estimate the pose of a common object (e.g., a face).

Figure: A network of cameras looking at the same object

Our goal is then to aggregate these estimates through distributed averaging. In Sensor Networks, a popular class of algorithms for computing averages is consensus algorithms. These techniques are attractive because they require communication only between neighbours and converge under mild network connectivity requirements. Unfortunately, they are designed for scalar, Euclidean quantities. Poses, on the other hand, are represented by a pair (R,T), where R is a rotation and T is a translation. Rotations, in particular, live on the manifold of the special orthogonal group SO(3). This means that the appropriate metric on the manifold (dm in the Figure) has to be used instead of the Euclidean one (de), which in turn induces a different notion of average, called the Karcher mean. We have developed algorithms that:

- take into account the appropriate measure of distance and the corresponding notion of Karcher mean in SO(3);

- extend consensus algorithms to average quantities in SO(3);

- come with a proof of convergence to the correct average.

Figure: Difference between the appropriate distance in the manifold and the Euclidean distance.
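The Python sketch below illustrates the manifold operations mentioned above for SO(3): the exponential and logarithm maps (via Rodrigues' formula), a centralized Karcher mean used only as a reference, and a basic Riemannian consensus step in which each node moves along geodesics towards its neighbours, R_i <- R_i exp(eps * sum_j log(R_i^T R_j)). It is a minimal sketch, not the exact algorithm of our papers: the step size `eps`, the ring topology and all function names are assumptions, and the logarithm map assumes rotation angles well below pi. In general, the consensus limit is a common rotation that is not guaranteed to coincide exactly with the Karcher mean of the initial estimates.

```python
import numpy as np


def hat(w):
    """3-vector -> skew-symmetric matrix."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def vee(W):
    """Skew-symmetric matrix -> 3-vector (inverse of hat)."""
    return np.array([W[2, 1], W[0, 2], W[1, 0]])


def exp_so3(w):
    """Exponential map so(3) -> SO(3) (Rodrigues' formula)."""
    w = np.asarray(w, dtype=float)
    theta = np.linalg.norm(w)
    K = hat(w)
    if theta < 1e-12:
        return np.eye(3) + K  # first-order approximation for tiny angles
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1.0 - np.cos(theta)) / theta**2 * K @ K)


def log_so3(R):
    """Logarithm map SO(3) -> so(3), returned as a 3-vector.

    Assumes the rotation angle is not close to pi (ill-conditioned there).
    """
    w = vee(R - R.T) / 2.0            # sin(theta) * axis
    s = np.linalg.norm(w)
    c = (np.trace(R) - 1.0) / 2.0     # cos(theta)
    if s < 1e-12:
        return w                      # small-angle case
    return np.arctan2(s, c) / s * w


def karcher_mean(rotations, iters=100, tol=1e-12):
    """Centralized Karcher mean, used here only as a reference."""
    R = rotations[0].copy()
    for _ in range(iters):
        v = np.mean([log_so3(R.T @ Ri) for Ri in rotations], axis=0)
        R = R @ exp_so3(v)
        if np.linalg.norm(v) < tol:
            break
    return R


def riemannian_consensus(rotations, neighbors, eps=0.2, iters=300):
    """R_i <- R_i exp(eps * sum_j log(R_i^T R_j)), all nodes in parallel.

    eps < 1 / (max degree) mirrors the usual conservative Euclidean choice.
    """
    R = [np.array(Ri, dtype=float) for Ri in rotations]
    for _ in range(iters):
        new_R = []
        for i, Ri in enumerate(R):
            v = sum((log_so3(Ri.T @ R[j]) for j in neighbors[i]), np.zeros(3))
            new_R.append(Ri @ exp_so3(eps * v))
        R = new_R
    return R


# Example: 4 cameras on a ring, each with a noisy estimate of a rotation about z.
angles = [0.1, 0.3, -0.2, 0.4]
estimates = [exp_so3([0.0, 0.0, a]) for a in angles]
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
agreed = riemannian_consensus(estimates, ring)
reference = karcher_mean(estimates)
```

For rotations about a common axis, as in this example, the update reduces to ordinary scalar consensus on the rotation angles, so all nodes converge to the rotation by the mean angle (0.15 rad), which here also equals the Karcher mean.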

We have also applied our algorithm to a face recognition application, where each node estimates the pose of the face using Eigenfaces/Tensorfaces and then the various estimates are aggregated with our consensus algorithm on SO(3).

Figure: Our algorithm applied to face recognition. Each camera estimates the pose of the face and communicates with its neighbors to aggregate the estimates.

We used the space of rotations SO(3) as a particular example, but our algorithms can be defined on any Riemannian manifold for which the exponential and logarithm maps can be implemented. We have also given sufficient conditions that guarantee the convergence of the algorithms. These conditions turn out to depend on the curvature of the manifold, its topology and the topology of the network. For instance, we have shown that our Riemannian consensus algorithm converges globally on manifolds with negative curvature and in networks with a linear topology.
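Because the update only needs the exponential and logarithm maps, it can be written once for a generic manifold. The sketch below (hypothetical names `manifold_consensus`, `sphere_exp`, `sphere_log`) instantiates it on the unit sphere S^2, a simple manifold where both maps have closed forms. The sphere has positive curvature, so the example deliberately starts from points that are close together; it illustrates the structure of the update and does not exercise the convergence conditions mentioned above.

```python
import numpy as np


def sphere_exp(x, v):
    """Exponential map on the unit sphere at x, for a tangent vector v."""
    t = np.linalg.norm(v)
    if t < 1e-12:
        y = x + v
        return y / np.linalg.norm(y)
    return np.cos(t) * x + np.sin(t) * v / t


def sphere_log(x, y):
    """Logarithm map on the unit sphere: tangent vector at x pointing to y
    along the shortest geodesic (undefined for antipodal points)."""
    u = y - np.dot(x, y) * x          # component of y orthogonal to x
    s = np.linalg.norm(u)
    if s < 1e-12:
        return np.zeros_like(x)
    return np.arctan2(s, np.dot(x, y)) / s * u


def manifold_consensus(points, neighbors, exp_map, log_map, eps=0.2, iters=300):
    """Consensus on a manifold described only by its exp and log maps:
    x_i <- exp_{x_i}(eps * sum_j log_{x_i}(x_j)), all nodes in parallel."""
    x = [np.asarray(p, dtype=float) for p in points]
    for _ in range(iters):
        new_x = []
        for i, xi in enumerate(x):
            v = sum((log_map(xi, x[j]) for j in neighbors[i]), np.zeros_like(xi))
            new_x.append(exp_map(xi, eps * v))
        x = new_x
    return x


# Example: 4 unit directions on the equator of S^2, on a ring network.
angles = [0.1, 0.3, -0.2, 0.4]
points = [np.array([np.cos(a), np.sin(a), 0.0]) for a in angles]
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
result = manifold_consensus(points, ring, sphere_exp, sphere_log)
```

Since the points lie on a common great circle, the update reduces to scalar consensus on their angles, and all nodes converge to the direction at the mean angle, 0.15 rad.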

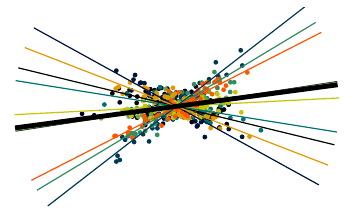

Consensus with Robustness to Outliers

One of the main drawbacks of traditional consensus algorithms is their inability to handle outliers in the measurements. This is because they are based on minimizing a Euclidean (L2) loss function, which is known to be sensitive to outliers. Our objective is therefore to propose a distributed optimization framework that can handle outliers in the measurements.
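The effect is easy to see on a single scalar quantity. The centralized sketch below (hypothetical data and function name) compares the minimizers of three losses on measurements containing one outlier: the L2 loss gives the mean, the L1 loss gives the median, and the Huber loss (quadratic for residuals up to `delta`, linear beyond) gives a value that is barely affected by the outlier.

```python
import numpy as np


def huber_location(z, delta=1.0, tol=1e-12):
    """Minimizer of sum_i huber_delta(x - z_i).

    The derivative sum_i clip(x - z_i, -delta, delta) is nondecreasing in x,
    so its root can be found by bisection between min(z) and max(z).
    """
    z = np.asarray(z, dtype=float)
    lo, hi = z.min(), z.max()
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if np.clip(mid - z, -delta, delta).sum() < 0:
            lo = mid          # derivative negative: minimizer lies to the right
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Four consistent measurements and one large outlier.
z = np.array([1.0, 1.2, 0.9, 1.1, 50.0])
l2_estimate = z.mean()               # minimizes the sum of squared errors: ~10.84
l1_estimate = np.median(z)           # minimizes the sum of absolute errors: 1.1
huber_estimate = huber_location(z)   # ~1.3 for delta = 1
```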

We have proposed a framework that generalizes consensus algorithms to robust loss functions that are strictly convex or merely convex. We pose the robust consensus problem as a constrained optimization problem, which we solve using a distributed primal-dual approach. More specifically, we separate the global Lagrangian function into a set of local Lagrangian functions, one for each node in the network. We distinguish two classes of problems, according to the convexity of the cost functions:

- In the case of strictly convex loss functions, the Lagrangian function is naturally distributed, hence robust consensus can be solved using local optimization at each node plus a consensus-like update of the Lagrange multipliers. The classical average consensus algorithm based on the L2 loss shows up as a special case.

- In the case of non-strictly convex functions, we use an augmented Lagrangian approach that adds the square of the constraints to the cost function in order to make the problem strictly convex. However, the resulting augmented Lagrangian function is not naturally distributed. By introducing auxiliary variables and using a modified augmented Lagrangian approach, we still find a distributed solution, which involves solving a modified local optimization problem with an additional quadratic form plus the same consensus-like update of the Lagrange multipliers.
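To make the strictly convex case concrete, here is a sketch of the primal-dual iteration for the quadratic loss f_i(x) = 0.5 * (x - z_i)^2, with one Lagrange multiplier per edge constraint x_i = x_j. Each node minimizes its local Lagrangian in closed form (x_i = z_i - mu_i), and the multipliers are updated by a dual gradient step. Substituting one step into the next gives x <- x - alpha * L x, with L the graph Laplacian, i.e., exactly the classical average consensus iteration. The function name and the step size `alpha` are our own assumptions; for a robust strictly convex loss the local step becomes a one-dimensional minimization instead of a closed form.

```python
import numpy as np


def primal_dual_l2_consensus(z, edges, alpha=0.2, iters=500):
    """Dual ascent for  min sum_i 0.5*(x_i - z_i)^2  s.t.  x_i = x_j on every edge.

    One multiplier per edge (i, j) enters the Lagrangian as lam_ij * (x_i - x_j),
    so the Lagrangian splits into one term per node. alpha must be below
    2 / (largest Laplacian eigenvalue); 0.2 is safe for a ring (eigenvalues <= 4).
    """
    z = np.asarray(z, dtype=float)
    lam = np.zeros(len(edges))
    x = z.copy()
    for _ in range(iters):
        # mu_i: net multiplier acting on node i (sum over its incident edges)
        mu = np.zeros_like(z)
        for k, (i, j) in enumerate(edges):
            mu[i] += lam[k]
            mu[j] -= lam[k]
        # Local step at each node: argmin_x 0.5*(x - z_i)^2 + mu_i*x = z_i - mu_i
        x = z - mu
        # Consensus-like multiplier update: gradient ascent on the dual
        for k, (i, j) in enumerate(edges):
            lam[k] += alpha * (x[i] - x[j])
    return x


ring_edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
z = [1.0, 1.2, 0.9, 1.1, 50.0]
x = primal_dual_l2_consensus(z, ring_edges)  # every entry approaches the mean, ~10.84
```

With the L2 loss, the single outlier drags every node's estimate to the mean of about 10.84, which is precisely the behaviour that motivates the robust losses.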

Although our derivation applies to general cost functions, we have focused on the problem of distributed robust consensus, where the measurements at some nodes of the network are corrupted by large outliers. We have therefore applied the framework to solve the average consensus and least-squares consensus problems in a robust fashion, using convex loss functions whose solutions are known to be robust to outliers, e.g., the L1 loss, the fair loss and the Huber loss. So far, the results have shown that the proposed distributed algorithm converges to the optimal solution of the equivalent centralized optimization problem in most cases.
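For the non-strictly convex case, the sketch below uses the well-known decentralized ADMM iteration (an augmented Lagrangian method in which the auxiliary edge variables have been eliminated analytically), applied to the L1 loss f_i(x) = |x - z_i|, whose centralized minimizer is the median. Each node solves a local problem with an extra quadratic term, which for the L1 loss has a closed-form soft-thresholding solution, and then updates its multiplier from the disagreement with its neighbours. This is a standard textbook scheme chosen for illustration and may differ in its details from our derivation; the function names, the penalty `c` and the iteration count are assumptions.

```python
import numpy as np


def soft_threshold(u, t):
    """Proximal operator of t*|.|: shrink u towards zero by t."""
    return np.sign(u) * np.maximum(np.abs(u) - t, 0.0)


def admm_l1_consensus(z, neighbors, c=1.0, iters=5000):
    """Decentralized ADMM for  min sum_i |x - z_i|  (whose solution is the median).

    Node i keeps its estimate x[i] and a multiplier-like variable a[i]; it only
    reads the current estimates of its neighbours.
    """
    z = np.asarray(z, dtype=float)
    n = len(z)
    deg = np.array([len(neighbors[i]) for i in range(n)], dtype=float)
    x = z.copy()
    a = np.zeros(n)
    for _ in range(iters):
        # Quadratic term: c * sum_j (x - (x_i + x_j)/2)^2
        #   = c*deg_i*(x - m_i)^2 + const, with m_i the mean of the midpoints.
        m = np.array([np.mean([(x[i] + x[j]) / 2 for j in neighbors[i]])
                      for i in range(n)])
        y = m - a / (2 * c * deg)
        # Local step: argmin_x |x - z_i| + a_i*x + c*deg_i*(x - m_i)^2,
        # solved in closed form by soft-thresholding around z_i.
        x = z + soft_threshold(y - z, 1.0 / (2 * c * deg))
        # Multiplier update from the disagreement with the neighbours.
        a = a + c * np.array([sum(x[i] - x[j] for j in neighbors[i])
                              for i in range(n)])
    return x


ring = {0: [1, 4], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 0]}
z = [1.0, 1.2, 0.9, 1.1, 50.0]
x = admm_l1_consensus(z, ring)  # entries approach the median 1.1, not the mean 10.84
```

On the same data as the L2 example, the nodes agree on a value near the median of the measurements, so the outlier no longer dominates the network estimate.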